Since the release of generative artificial intelligence (genAI) tools like ChatGPT in the fall of 2022, it has become clear that this technology will change many aspects of our lives. Tasks like reading and writing can be made much easier with genAI chatbots to help summarize, analyze, and create. We can debate the ethics of this shift and its potential impact on society, but it is foolish to try to stop the integration of AI. From iPhones to Microsoft Word to Netflix and Google Maps, AI is here.

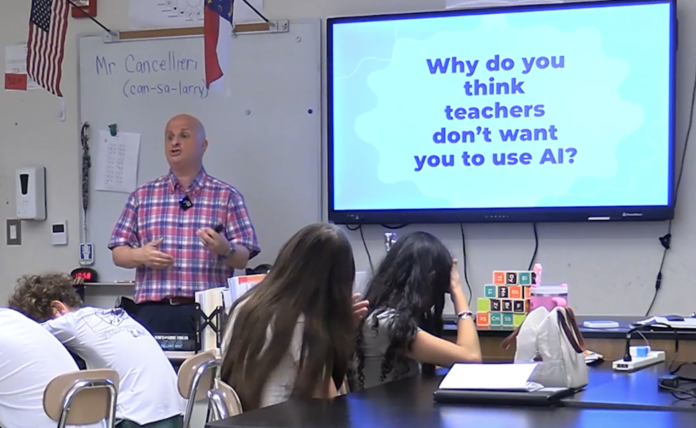

As educators, we owe it to our students to help prepare them for an AI-assisted world. Just as schools began to teach students how (and when) to use pocket calculators once they became readily available, so must we take the lead in showing students how to use genAI tools effectively and ethically. Students need to understand the pitfalls of leaning too heavily on AI to solve problems and the consequences that may come from misrepresenting AI-generated content as their own. There is a fine line between getting inspiration or feedback and copying and pasting, and it’s our job to help students learn where that line is.

To achieve this goal, secondary teachers must build a culture of ethical AI use in their classrooms. Begin by encouraging and rewarding transparency regarding how genAI is used in schoolwork. Continue by setting clear expectations about how much support (and how much of the finished work) students can receive from AI on a given assignment. Include clear and consistent consequences if students try to pass off AI-generated content as their own. These actions, when taken together over a semester or a year, will help students internalize the “rules of the road” when it comes to their interactions with genAI. Let’s look at those actions.

Teach transparency

The most basic expectation for students using AI in ways that don’t hinder their learning is for them to admit when they use it. We can encourage this openness by demonstrating how we as educators use genAI tools in our work. When I get the help of ChatGPT to brainstorm ideas for kicking off a new unit, I tell my students what I did and how I did it. Sure, this requires some vulnerability, but it reinforces the idea that it’s okay to get help when you need it if you acknowledge that help.

To this end, when AI is allowed on an assignment in my class, I provide my students with strategies for documenting how AI was used and instructions for submitting that documentation with their final work. I make a point of reminding students that this process helps them take responsibility and avoid being accused of cheating. Documenting AI use also helps us recall the prompts that worked well so we can use them again.

Set clear expectations

My middle school students — like most teenagers — thrive in my classroom when they have a solid understanding of the learning goals and expectations. A good tool for this purpose is Leon Furze’s AI Assessment Scale, which provides clear language and visuals to identify which of four different levels of AI assistance is allowed on a particular assignment. Using this model, we focus on the purpose of the assignment and the role that genAI tools can play in completing it, and students know from the outset how much help they are allowed to get from the AI.

Let students know what happens if they cheat

Enforcing any classroom policy successfully requires that all stakeholders (students, teachers, administrators, and families) be aware of the expected behavior and the consequences of poor decision-making.

Establish the rules early, communicate them clearly, and make sure that the punishments are appropriate. Enter into any discussion of the possibility of AI cheating with an open mind and a willingness to forgive, but also with the best evidence you can provide. If students know what will happen if they take credit for AI-generated content, and understand the rationale, they are far more likely to see the benefits of using genAI tools appropriately.

A note on AI detection

Using tech tools to tell the difference between student-generated and AI-generated content may seem like the most fundamental part of enforcing ethical AI use in your classroom, but it is not.

First, you need to know that there is no such thing as a reliable and accurate AI detector. In the absence of such a tool, your best “detector” is your familiarity with your students’ work. If your students write for you often, without access to AI or other assistance, you will be far more likely to recognize when they stray from your expectations and try to submit AI writing as their own.

And the kind of close, supportive relationship you develop when you frequently read and provide personalized feedback on their work is the best tool you can have when addressing this issue in your classroom. Students (and families) will respond better when cheating concerns arise if you have established a supportive atmosphere and consistent, fair AI policies.

Interested in learning more?

I am hosting a webinar, “Creating Not Copying: Teaching Students to Use AI Ethically,” on Thursday, Feb. 27, 2025, at 5 p.m. EST. The session is the first of three workshops for the spring semester of Not Your Average PD, a free online professional development series for educators presented by the Kenan Fellows Program for Teacher Leadership at N.C. State University.